Detailed Workflow¶

This notebook walks you through a typical AutoEIS workflow, from data loading to visualization and model ranking. In summary, the steps covered in this notebook are:

Load EIS data

Preprocess EIS data (removing outliers, etc.)

Generate a pool of equivalent circuit models

Fit model parameters to the EIS data using Bayesian inference

Rank models based on goodness-of-fit and complexity

Visualize the results

Set up the environment¶

AutoEIS relies on EquivalentCircuits.jl package to perform the EIS analysis. The package is not written in Python, so we need to install it first. AutoEIS ships with julia_helpers module that helps to install and manage Julia dependencies with minimal user interaction. For convenience, installing Julia and the required packages is done automatically when you import autoeis for the first time. If you have Julia installed already (discoverable in system PATH), it’ll get detected and

used, otherwise, it’ll be installed automatically.

Note

If this is the first time you’re importing AutoEIS, executing the next cell will take a while, outputting a lot of logs. Re-run the cell to get rid of the logs.

[1]:

import ipywidgets as widgets

import matplotlib.pyplot as plt

import numpy as np

import numpyro

import seaborn as sns

from IPython.display import display

import autoeis as ae

ae.visualization.set_plot_style()

# Set this to True if you're running the notebook locally

interactive = True

Load EIS data¶

Once the environment is set up, we can load the EIS data. You can use `pyimpspec <https://vyrjana.github.io/pyimpspec/guide_data.html>`__ to load EIS data from a variety of popular formats. Eventually, AutoEIS requires two arrays: Z and freq. Z is a complex impedance array, and freq is a frequency array. Both arrays must be 1D and have the same length. The impedance array must be in Ohms, and the frequency array must be in Hz.

For convenience, we provide a function load_test_dataset() in autoeis.io to load a test dataset. The function returns a tuple of freq and Z.

[2]:

freq, Z = ae.io.load_test_dataset()

Note

If your EIS data is stored as text, you can easily load them using numpy.loadtxt. See NumPy’s documentation for more details.

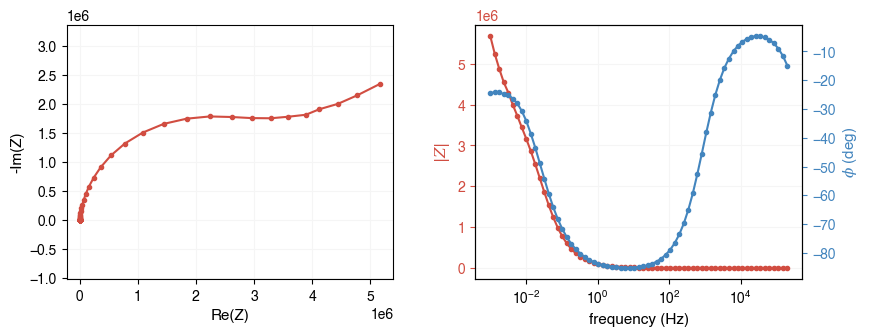

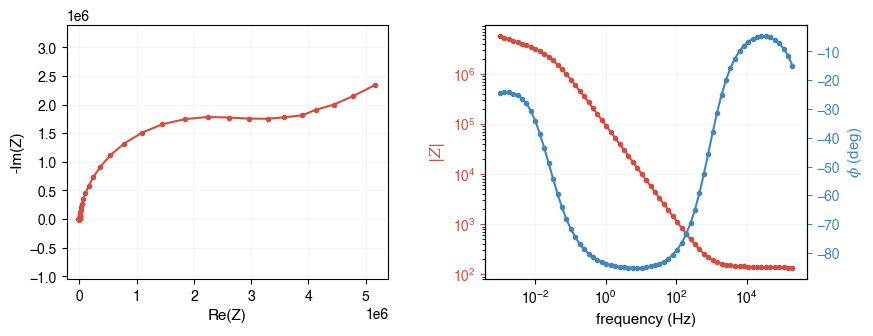

Now let’s plot the data using AutoEIS’s built-in plotting function plot_impedance_combo. The function takes the impedance array and the frequency array as inputs. It will plot the impedance spectrum in the Nyquist plot and the Bode plot. All plotting functions in AutoEIS can either be directly called or an Axes object can be passed in to specify the plotting location.

Alternatively, you can use separately call plot_nyquist and plot_bode functions to plot the Nyquist and Bode plots, in separate figures.

[3]:

ax = ae.visualization.plot_impedance_combo(freq, Z)

# Alternative way to plot the EIS data

# ax = ae.visualization.plot_nyquist(Z, fmt=".")

# ax.set_title("Nyquist plot")

# ax = ae.visualization.plot_bode(freq, Z)

# ax[0].figure.suptitle("Bode plot")

Note

When plotting EIS data, much information can be lost if plotted on a linear scale (especially at high frequencies). It is recommended to plot the data on a logarithmic scale. You can do this by simply passing log=True to the plotting functions.

[4]:

ax = ae.visualization.plot_impedance_combo(freq, Z, log=True)

Preprocess impedance data¶

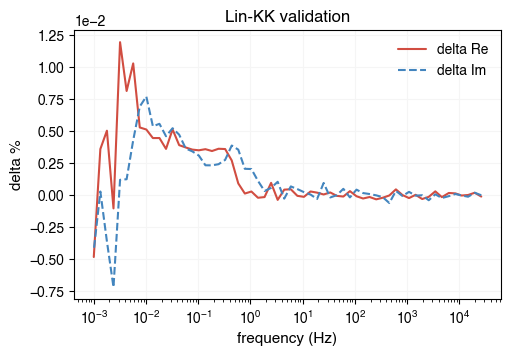

Before performing the EIS analysis, we need to preprocess the impedance data. The preprocessing step is to remove outliers. AutoEIS provides a function to perform the preprocessing. As part of the preprocessing, the impedance measurements with a positive imaginary part are removed, and the rest of the data are filtered using linear KK validation. The function returns the filtered impedance array and the frequency array.

[5]:

freq, Z, aux = ae.utils.preprocess_impedance_data(freq, Z, tol_linKK=5e-2, return_aux=True)

# NOTE: Since linKK could change `freq`, you should use `aux.freq` to plot the residuals

ae.visualization.plot_linKK_residuals(aux.freq, aux.res.real, aux.res.imag)

[22:31:31] WARNING 10% of data filtered out.

[5]:

<Axes: title={'center': 'Lin-KK validation'}, xlabel='frequency (Hz)', ylabel='delta %'>

Generate candidate equivalent circuits¶

In this stage, AutoEIS generates a list of candidate equivalent circuits using a customized genetic algorithm (done via the package EquivalentCircuits.jl). The function takes the filtered impedance array and the frequency array as inputs. It returns a list of candidate equivalent circuits. The function has a few optional arguments that can be used to control the number of candidate circuits and the circuit types. The default number of candidate circuits is 10, and the default circuit types

are resistors, capacitors, constant phase elements, and inductors. The function runs in parallel by default, but you can turn it off by setting parallel=false.

Note

Since running the genetic algorithm can be time-consuming, we will use a pre-generated list of candidate circuits in this demo to get you started quickly. If you want to generate the candidate circuits yourself, set use_pregenerated_circuits=False in the cell below.

[6]:

use_pregenerated_circuits = False

if use_pregenerated_circuits:

circuits_unfiltered = ae.io.load_test_circuits()

else:

kwargs = {

"iters": 12,

"complexity": 12,

"population_size": 100,

"generations": 30,

"terminals": "RLP",

"tol": 1e-3,

"parallel": True

}

circuits_unfiltered = ae.core.generate_equivalent_circuits(freq, Z, **kwargs)

# Since generating circuits is expensive, let's save the results to a CSV file

circuits_unfiltered.to_csv("circuits_unfiltered.csv", index=False)

# To load from file, uncomment the next 2 lines (line 2 is to convert str -> Python objects)

# circuits_unfiltered = pd.read_csv("circuits_unfiltered.csv")

# circuits_unfiltered["Parameters"] = circuits_unfiltered["Parameters"].apply(eval)

circuits_unfiltered

[6]:

| circuitstring | Parameters | |

|---|---|---|

| 0 | [R1,[L2,P3]]-L4-[P5-R6,[R7,P8]] | {'R1': 139.78551370647892, 'L2': 0.27203693370... |

| 1 | [R1,P2]-P3-[L4,R5]-[P6,R7] | {'R1': 2413079.958554732, 'P2w': 2.14144894920... |

| 2 | [R1,[P2-R3,R4-P5]-[R6,L7]-P8-R9] | {'R1': 7242850.786755418, 'P2w': 959679050.526... |

| 3 | [P1,[P2-R3,P4-R5]-L6] | {'P1w': 3.8117477836854603e-07, 'P1n': 0.15248... |

| 4 | [P1-L2,P3-R4] | {'P1w': 4.494992737332561e-07, 'P1n': 0.188965... |

| 5 | [P1,R2]-[R3,P4]-R5 | {'P1w': 3.407336521921721e-05, 'P1n': 0.999999... |

| 6 | R1-[R2-P3,P4-L5] | {'R1': 142.09211725762967, 'R2': 1832275.98713... |

| 7 | [R1,L2]-[P3,P4] | {'R1': 142.12388276711138, 'L2': 5.0, 'P3w': 4... |

| 8 | [P1-[[P2,L3],L4],P5] | {'P1w': 1.7254796027745665e-06, 'P1n': 0.96352... |

| 9 | [P1-L2,P3-R4] | {'P1w': 4.208902625324641e-07, 'P1n': 0.173613... |

| 10 | [P1,[L2,R3]-P4] | {'P1w': 4.0562852927697366e-07, 'P1n': 0.16648... |

| 11 | [[R1,P2],P3-R4] | {'R1': 979593243.2628126, 'P2w': 4.15796112284... |

Filter candidate equivalent circuits¶

Note that all these circuits generated by the GEP process probably fit the data well, but they may not be physically meaningful. Therefore, we need to filter them to find the ones that are most plausible. AutoEIS uses “statistical plausibility” as a proxy for gauging “physical plausibility”. To this end, AutoEIS provides a function to filter the candidate circuits based on some heuristics (read our paper for the exact steps and the supporting rationale).

[7]:

circuits = ae.core.filter_implausible_circuits(circuits_unfiltered)

# Let's save the filtered circuits to a CSV file as well

circuits.to_csv("circuits_filtered.csv", index=False)

# To load from file, uncomment the next 2 lines (line 2 is to convert str -> Python objects)

# circuits = pd.read_csv("circuits_filtered.csv")

# circuits["Parameters"] = circuits["Parameters"].apply(eval)

circuits

[7]:

| circuitstring | Parameters | |

|---|---|---|

| 0 | [P1,R2]-[R3,P4]-R5 | {'P1w': 3.407336521921721e-05, 'P1n': 0.999999... |

| 1 | R1-[R2-P3,P4-L5] | {'R1': 142.09211725762967, 'R2': 1832275.98713... |

Perform Bayesian inference¶

Now that we have narrowed down the candidate circuits to a few good ones, we can perform Bayesian inference to find the ones that are statistically most plausible.

[8]:

results = ae.core.perform_bayesian_inference(circuits, freq, Z)

Visualize results¶

Now, let’s take a look at the results. perform_bayesian_inference returns a list of InferenceResult objects. Each InferenceResult object contains all the information about the Bayesian inference, including the posterior distribution, the prior distribution, the likelihood function, the trace, and the summary statistics.

Before we visualize the results, let’s take a look at the summary statistics. The summary statistics are the mean, the standard deviation, and the 95% credible interval of the posterior distribution. The summary statistics are useful for quickly gauging the uncertainty of the parameters.

[9]:

for result in results:

if result.converged:

ae.visualization.print_summary_statistics(result.mcmc, result.circuit)

[P1,R2]-[R3,P4]-R5, 0/1000 divergences ┏━━━━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━┓ ┃ Parameter ┃ Mean ┃ Std ┃ Median ┃ 5.0% ┃ 95.0% ┃ ┡━━━━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━┩ │ P1n │ 9.52e-01 │ 2.11e-03 │ 9.52e-01 │ 9.49e-01 │ 9.55e-01 │ │ P1w │ 2.04e-06 │ 2.01e-08 │ 2.04e-06 │ 2.01e-06 │ 2.08e-06 │ │ P4n │ 9.26e-01 │ 1.48e-02 │ 9.26e-01 │ 9.04e-01 │ 9.52e-01 │ │ P4w │ 2.26e-05 │ 2.19e-06 │ 2.23e-05 │ 1.91e-05 │ 2.64e-05 │ │ R2 │ 3.14e+06 │ 6.74e+04 │ 3.14e+06 │ 3.02e+06 │ 3.24e+06 │ │ R3 │ 4.47e+06 │ 2.13e+05 │ 4.47e+06 │ 4.12e+06 │ 4.82e+06 │ │ R5 │ 1.41e+02 │ 8.24e-01 │ 1.41e+02 │ 1.39e+02 │ 1.42e+02 │ │ sigma.mag │ 9.05e-03 │ 8.83e-04 │ 8.98e-03 │ 7.74e-03 │ 1.05e-02 │ │ sigma.phase │ 1.93e-02 │ 1.92e-03 │ 1.91e-02 │ 1.65e-02 │ 2.27e-02 │ └─────────────┴──────────┴──────────┴──────────┴──────────┴──────────┘

R1-[R2-P3,P4-L5], 0/1000 divergences ┏━━━━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━┓ ┃ Parameter ┃ Mean ┃ Std ┃ Median ┃ 5.0% ┃ 95.0% ┃ ┡━━━━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━┩ │ L5 │ 6.30e-32 │ 5.87e-31 │ 1.73e-35 │ 6.00e-39 │ 6.35e-32 │ │ P3n │ 3.87e-01 │ 1.11e-02 │ 3.88e-01 │ 3.68e-01 │ 4.06e-01 │ │ P3w │ 2.09e-06 │ 1.35e-07 │ 2.09e-06 │ 1.88e-06 │ 2.33e-06 │ │ P4n │ 9.55e-01 │ 4.14e-04 │ 9.55e-01 │ 9.54e-01 │ 9.56e-01 │ │ P4w │ 1.82e-06 │ 3.71e-09 │ 1.82e-06 │ 1.81e-06 │ 1.82e-06 │ │ R1 │ 1.41e+02 │ 2.66e-01 │ 1.41e+02 │ 1.41e+02 │ 1.42e+02 │ │ R2 │ 2.69e+06 │ 5.64e+04 │ 2.69e+06 │ 2.59e+06 │ 2.78e+06 │ │ sigma.mag │ 2.48e-03 │ 2.53e-04 │ 2.47e-03 │ 2.11e-03 │ 2.93e-03 │ │ sigma.phase │ 1.16e-02 │ 1.16e-03 │ 1.15e-02 │ 9.86e-03 │ 1.37e-02 │ └─────────────┴──────────┴──────────┴──────────┴──────────┴──────────┘

Note that some rows have been highlighted in yellow, indicating that the standard deviation is greater than the mean. This is not necessarily a bad thing, but it screams “caution” due to the high uncertainty. In this case, we need to check the data and the model to see if there is anything wrong. For example, the data may contain outliers, or the model may be overparameterized.

Before we investigate the posteriors for individual circuit components for each circuit, let’s take a bird’s eye view of the results, so you have a general feeling about which circuits are generally better, and which ones are worse. For this purpose, we first need to evaluate the circuits based on some common metrics, and then rank them accordingly:

[10]:

# We first need to augment the circuits dataframe with MCMC results

circuits["InferenceResult"] = results

# Now, we can compute the fitness metrics, then rank/visualize accordingly

circuits = ae.core.compute_fitness_metrics(circuits, freq, Z)

ae.visualization.print_inference_results(circuits)

[10]:

Inference results ┏━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━┳━━━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┳━━━━┓ ┃ Circuit ┃ WAIC (mag) ┃ WAIC (phase) ┃ R2 (re) ┃ R2 (im) ┃ MAPE (re) ┃ MAPE (im) ┃ Np ┃ ┡━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━╇━━━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━╇━━━━┩ │ R1-[R2-P3,P4-L5] │ -6.18e+02 │ -3.55e+02 │ 1.000 │ 0.999 │ 4.28e+00 │ 3.87e+00 │ 7 │ │ [P1,R2]-[R3,P4]-R5 │ -4.61e+02 │ -2.83e+02 │ 0.997 │ 0.994 │ 8.22e+00 │ 5.16e+00 │ 7 │ └────────────────────┴────────────┴──────────────┴─────────┴─────────┴───────────┴───────────┴────┘

Now, let’s take one step further and visualize the results. To get an overview of the results, we can plot the posterior distributions of the parameters as well as the trace plots. It’s an oversimplification, but basically, a good posterior distribution should be unimodal and symmetric, and the trace plot should be stationary. In probabilistic terms, this means that given the circuit model, the data are informative about the parameters, and the MCMC algorithm has converged.

On the other hand, if the posterior distribution is multimodal or skewed, or the trace plot is not stationary, it means that the data are not informative about the parameters, and the MCMC algorithm has not converged. In this case, we need to check the data and the model to see if there is anything wrong. For example, the data may contain outliers, or the model may be overparameterized.

Note

For the following cell to work, you need to set interactive=True at the beginning of the notebook. It’s turned off by default since GitHub doesn’t render interactive plots.

[11]:

def plot_trace(samples):

"""Plots the posterior and trace of a variable in the MCMC sampler."""

output = widgets.Output()

with output:

fig, ax = plt.subplots(ncols=2, figsize=(9, 3))

log_scale = bool(np.std(samples) / np.median(samples) > 2)

kwargs_hist = {

"stat": "density",

"log_scale": log_scale,

"color": "lightblue",

"bins": 25,

}

# ax[0] -> posterior, ax[1] -> trace

sns.histplot(samples, **kwargs_hist, ax=ax[0])

kwargs_kde = {"log_scale": log_scale, "color": "red"}

sns.kdeplot(samples, **kwargs_kde, ax=ax[0])

# Plot trace

ax[1].plot(samples, alpha=0.5)

ax[1].set_yscale("log" if log_scale else "linear")

plt.show(fig)

return output

def plot_trace_all(mcmc: "numpyro.MCMC", circuit: str):

"""Plots the posterior and trace of all variables in the MCMC sampler."""

variables = ae.parser.get_parameter_labels(circuit)

samples = mcmc.get_samples()

children = [plot_trace(samples[var]) for var in variables]

tab = widgets.Tab()

tab.children = children

tab.titles = variables

return tab

def dropdown_trace_plots():

"""Creates a dropdown menu to select a circuit and plot its trace."""

def on_dropdown_clicked(change):

with output:

output.clear_output()

idx = circuits_list.index(change.new)

plot = trace_plots[idx]

display(plot)

dropdown = widgets.Dropdown(

description="Circuit:", options=circuits_list, value=circuits_list[0]

)

output = widgets.Output()

dropdown.observe(on_dropdown_clicked, names="value")

display(dropdown, output)

# Default to the first circuit

with output:

display(trace_plots[0])

# Cache rendered plots to avoid re-rendering

circuits_list = circuits["circuitstring"].tolist()

trace_plots = []

for i, row in circuits.iterrows():

circuit = row["circuitstring"]

mcmc = row["InferenceResult"].mcmc

if row["converged"]:

trace_plots.append(plot_trace_all(mcmc, circuit))

else:

trace_plots.append("Inference failed")

if interactive:

dropdown_trace_plots()

The functions defined in the above cell are used to make the interactive dropdown menu. The dropdown menu lets you select a circuit model, and shows the posterior distributions of the parameters as well as the trace plots. The dropdown menu is useful for quickly comparing the results of different circuit models. Running this cell for the first time may take a while (~ 5 seconds per circuit), but once run, all the plots will be cached.

The distributions for the most part look okay, although in some cases (like R2 and R4 in the first circuit) the span is quite large (~ few orders of magnitude). Nevertheless, the distributions are bell-shaped. The trace plots also look stationary.

Now, let’s take a look at the posterior predictive distributions. “Posterior predictive” is a fancy term for “model prediction”, meaning that after we have performed Bayesian inference, we can use the posterior distribution to make predictions. In this case, we can use the posterior distribution to predict the impedance spectrum and compare it with our measurements and see how well they match. After all, all the posteriors might look good (bell-shaped, no multimodality, etc.) but if the model predictions don’t match the data, then the model is not good.

Note

For the following cell to work, you need to set interactive=True at the beginning of the notebook. It’s turned off by default since GitHub doesn’t render interactive plots.

[12]:

def plot_nyquist(mcmc: "numpyro.MCMC", circuit: str):

"""Plots Nyquist plot of the circuit using the median of the posteriors."""

# Compute circuit impedance using median of posteriors

samples = mcmc.get_samples()

variables = ae.parser.get_parameter_labels(circuit)

percentiles = [10, 50, 90]

params_list = [[np.percentile(samples[v], p) for v in variables] for p in percentiles]

circuit_fn = ae.utils.generate_circuit_fn(circuit)

Zsim_list = [circuit_fn(freq, params) for params in params_list]

# Plot Nyquist plot

fig, ax = plt.subplots(figsize=(5.5, 4))

for p, Zsim in zip(percentiles, Zsim_list):

ae.visualization.plot_nyquist(Zsim, fmt="-", label=f"model ({p}%)", ax=ax)

ae.visualization.plot_nyquist(Z, fmt="o", label="measured", ax=ax)

# Next line is necessary to avoid plotting the first time

plt.close(fig)

return fig

def dropdown_nyquist_plots():

"""Creates a dropdown menu to select a circuit and plot its Nyquist plot."""

def on_change(change):

with output:

output.clear_output()

idx = circuits_list.index(change.new)

fig = nyquist_plots[idx]

display(fig)

output = widgets.Output()

dropdown = widgets.Dropdown(

options=circuits_list, value=circuits_list[0], description="Circuit:"

)

dropdown.observe(on_change, names="value")

display(dropdown, output)

# Default to the first circuit

with output:

display(nyquist_plots[0])

# Cache rendered plots to avoid re-rendering

circuits_list = circuits["circuitstring"].tolist()

nyquist_plots = []

for i, row in circuits.iterrows():

circuit = row["circuitstring"]

mcmc = row["InferenceResult"].mcmc

if row["converged"]:

nyquist_plots.append(plot_nyquist(mcmc, circuit))

else:

nyquist_plots.append("Inference failed")

if interactive:

dropdown_nyquist_plots()

/Users/runner/work/AutoEIS/AutoEIS/src/autoeis/visualization.py:165: UserWarning: color is redundantly defined by the 'color' keyword argument and the fmt string "o" (-> color=(0.8549019607843137, 0.4392156862745098, 0.17254901960784313, 1)). The keyword argument will take precedence.

ax.plot(Z.real, -Z.imag, fmt, c=color, markersize=markersize, label=label, alpha=alpha)